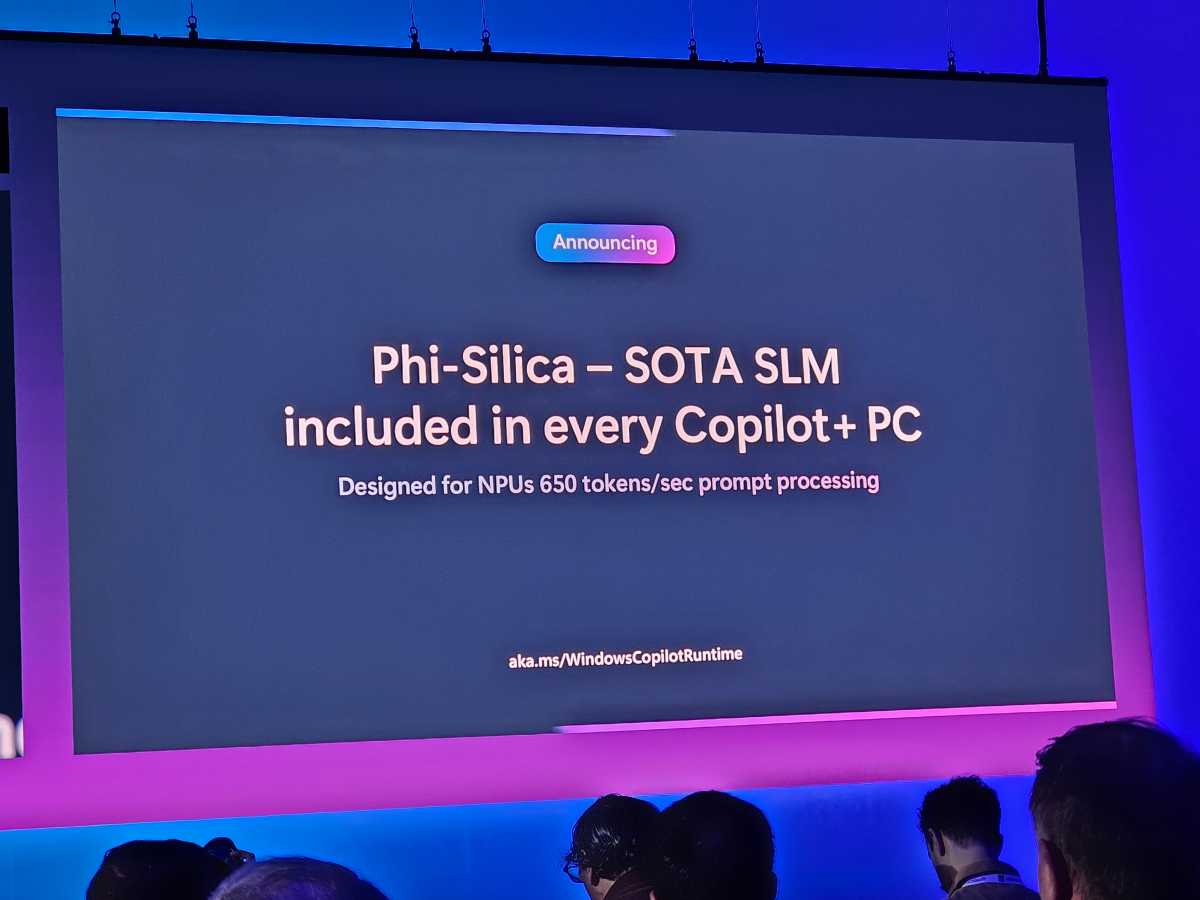

In 2023, Microsoft was a big believer in large language models running in the cloud. At Microsoft Build, the company launched Phi Silica, a small language model designed specifically to run on the NPUs in new Copilot+ PCs.

In April, Microsoft announced Phi-3-mini, a model small enough to run on a local PC. Phi Silica is a derivative of Phi-3-mini, designed specifically to run on the Copilot+ PCs that Microsoft announced Monday.

Most interactions with AI take place in the cloud; Microsoft’s existing Copilot service, even on your PC, talks to a remote Microsoft server. A cloud-based service like Copilot is built on a large language model (LLM), with billions of parameters that increase the accuracy of Copilot’s answers.

Mark Hachman / IDG

But Microsoft’s upcoming Recall PC search engine, which depends on the NPU, runs locally for privacy’s sake. Users don’t want Recall indexing incognito or anonymous searches. An LLM, however, simply requires too much memory and storage space to run on a typical PC. That’s the justification for small language models, or SLMs.

Microsoft has said that Recall and other AI features will depend on these sorts of SLMs. Phi Silica uses a 3.3-billion-parameter model, which Microsoft has fine-tuned for both accuracy and speed despite its smaller size.
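To put the 3.3 billion parameter figure in perspective, here is a rough back-of-the-envelope sketch of how much space the model's weights alone would occupy at different numeric precisions. Microsoft has not said in this announcement what precision Phi Silica uses, so the bit widths below are illustrative assumptions, and the helper function name is hypothetical. The estimate also ignores working memory needed at runtime.

```python
def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Parameter count cited for Phi Silica in the article.
PHI_SILICA_PARAMS = 3.3e9

# Assumed precisions, for illustration only; not confirmed by Microsoft.
for bits in (32, 16, 8, 4):
    size = weight_footprint_gb(PHI_SILICA_PARAMS, bits)
    print(f"{bits:>2}-bit weights: {size:.2f} GB")
```

Under these assumptions, the weights come to about 6.6 GB at 16 bits per parameter and about 1.65 GB at 4 bits. The same arithmetic applied to a model with tens or hundreds of billions of parameters shows why such models are impractical on a typical PC.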

“Windows is the first platform to have a state-of-the-art small language model (SLM) custom built for the NPU and shipping inbox,” Microsoft said in a blog post.

Executives also referred to Phi Silica as an “out of the box” solution, however, which raises the question of whether the SLM will ship on Copilot+ PCs themselves, as a local version of Copilot. Microsoft has yet to answer that question, but it has until June 23, when Copilot+ PCs begin shipping, to do so.