Many people believe that Microsoft will eventually provide a version of Copilot that will run within Windows right on your PC. Microsoft may have just tipped its hand with a new AI model, or LLM, that is specifically designed to run on local devices.

On Monday, Microsoft introduced “Phi-3-mini,” a 3.8-billion parameter language model that the company claims rivals the performance of the slightly older GPT-3.5 (the model behind the free version of ChatGPT) and Mixtral 8x7B. The paper is titled “Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone,” which is a strong sign that Microsoft now has an LLM small enough to run directly on a phone, and by extension on your PC.

Microsoft hasn’t said that Phi-3-mini will be the next Copilot, running locally on your PC. But there’s a case to be made that it could be, and we already have an idea of how well it would work.

1.) Local AI matters

It’s a familiar argument: if you send a query to Bing, Google Gemini, Claude, or Copilot, that query is processed and stored in the cloud. The query could be embarrassing (“is this wart bad?”) or sensitive (“can I get in trouble for stealing mail?”), or it could involve something you don’t want to leak at all, like a list of bank statements.

Corporations would like to ask questions of their data via AIs like Copilot, but there are no “on premises” versions of Copilot to date. A local version of Copilot is almost a necessary option at this point.

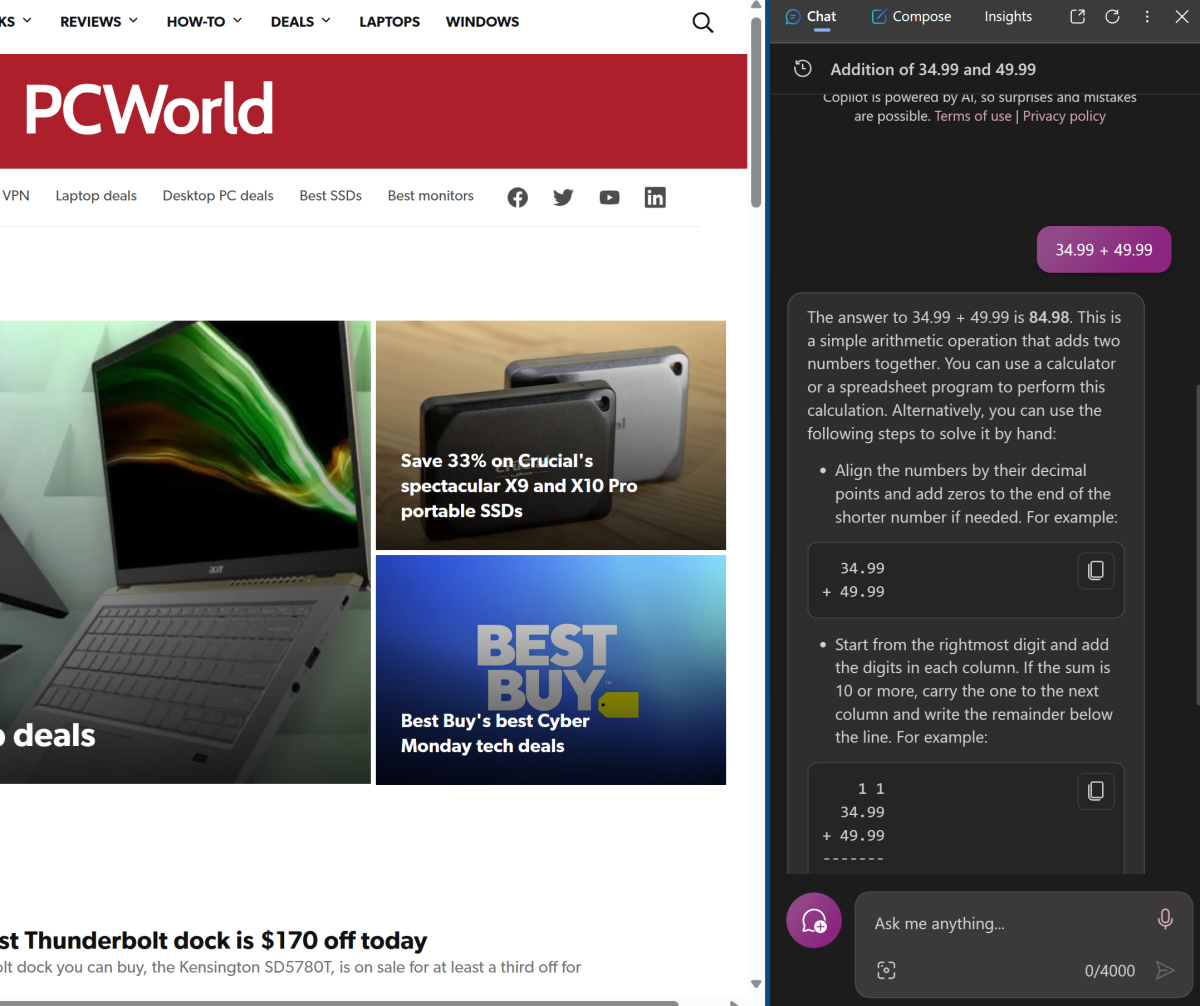

Mark Hachman / IDG

2.) Phi-3-mini is small, and that’s good

Many local LLMs require at least 8GB of RAM and many gigabytes of storage space for the necessary files on the PC alone, and that’s assuming the LLMs have been “quantized,” or compressed. There’s a reason Copilot runs in the cloud: Microsoft’s Azure has both the compute and the storage needed to house it.

This is one of the paper’s most important lines: “Thanks to its small size, phi3-mini can be quantized to 4-bits so that it only occupies ≈ 1.8GB of memory,” it says. “We tested the quantized model by deploying phi-3-mini on iPhone 14 with A16 Bionic chip running natively on-device and fully offline achieving more than 12 tokens per second.”

What this means is that a PC with roughly 1.8GB of free memory could run a local version of Copilot built on this model. That covers far more PCs than today’s local LLMs do, probably including older machines with 8GB of RAM. And if an iPhone can run it, chances are that most PCs can too.
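
The ≈1.8GB figure is easy to sanity-check. The short sketch below is back-of-the-envelope arithmetic, not anything taken from Microsoft’s paper: it multiplies the 3.8 billion parameters by the number of bits stored per weight. The function name is made up for illustration, and the estimate counts only the weights, ignoring whatever extra memory the runtime needs.

```python
def quantized_weight_bytes(num_params: float, bits_per_weight: int) -> float:
    """Bytes needed to store the weights alone.

    Assumption: ignores activations, the KV cache, and runtime overhead,
    so real-world memory use will be somewhat higher.
    """
    return num_params * bits_per_weight / 8


PARAMS = 3.8e9  # parameter count reported for Phi-3-mini

# Compare common precisions: 16-bit (unquantized), 8-bit, and 4-bit.
for bits in (16, 8, 4):
    size = quantized_weight_bytes(PARAMS, bits)
    print(f"{bits:>2}-bit: {size / 1e9:.2f} GB ({size / 2**30:.2f} GiB)")
```

At 4 bits per weight, the weights come to about 1.9 GB, or roughly 1.77 GiB, which lines up with the paper’s “≈ 1.8GB.” At 16 bits the same model would need about 7.6 GB, which is why quantization matters so much here.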

LLMs spit out text piece by piece, like a dot-matrix printer. A token averages roughly four characters of English text, so twelve tokens per second works out to about 48 characters per second: not great, but not too shabby.
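
To put that speed in perspective, here is a small sketch of the same arithmetic. It assumes about four characters per token, a common rule of thumb for English text rather than a number from the paper, and the function names are hypothetical.

```python
CHARS_PER_TOKEN = 4  # assumption: rough average for English text, not from the paper


def chars_per_second(tokens_per_second: float,
                     chars_per_token: float = CHARS_PER_TOKEN) -> float:
    """Convert a token generation rate into an approximate character rate."""
    return tokens_per_second * chars_per_token


def seconds_to_generate(num_chars: int, tokens_per_second: float,
                        chars_per_token: float = CHARS_PER_TOKEN) -> float:
    """Estimate how long a reply of num_chars characters takes to appear."""
    return num_chars / chars_per_second(tokens_per_second, chars_per_token)


rate = chars_per_second(12)             # the iPhone 14 figure from the paper
wait = seconds_to_generate(500, 12)     # a reply of about 500 characters
print(f"{rate:.0f} characters per second, {wait:.1f} seconds for 500 characters")
```

Under that assumption, a reply of about 500 characters, a short paragraph, would take a little over ten seconds to finish on the iPhone 14 the paper tested.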

3.) Its performance is good, too — but not too good

Microsoft still wants to tempt people into using Copilot in the cloud, and paying for subscriptions like Copilot Pro. And Microsoft takes time to show off how Phi-3-mini performs just as well as other LLMs. But a smaller number of parameters means that an AI model doesn’t “know” as much as larger LLMs do.

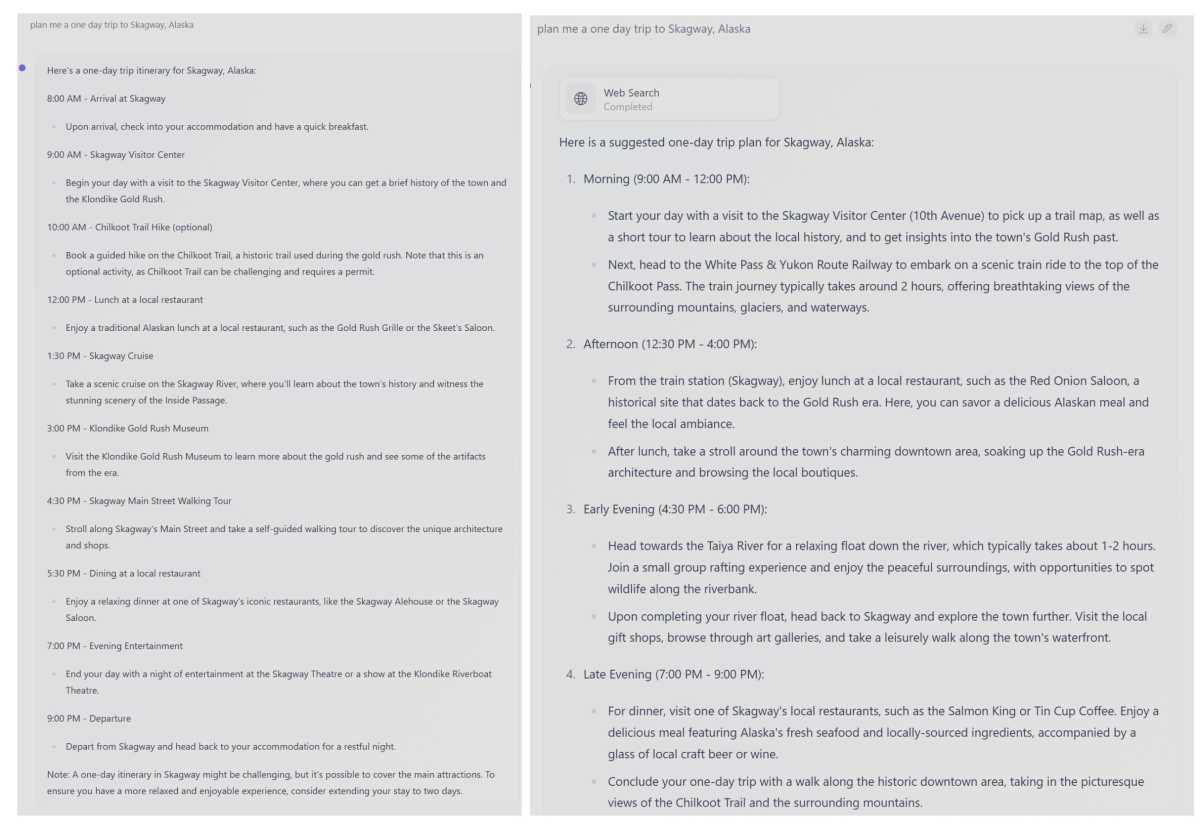

Arxiv.org

But Microsoft has a solution. “The model simply does not have the capacity to store too much ‘factual knowledge’, which can be seen for example with low performance on TriviaQA [a benchmark],” the paper notes. “However, we believe such weakness can be resolved by augmentation with a search engine.”

Bing to the rescue!
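
The paper doesn’t spell out how that augmentation would work. One common pattern, sketched below purely as an illustration, is to paste search results into the prompt so the small model can read the facts instead of recalling them. Everything here is hypothetical: the function name, the prompt wording, and the hard-coded snippet standing in for a real search call.

```python
def build_augmented_prompt(question: str, snippets: list[str],
                           max_snippets: int = 3) -> str:
    """Combine a user question with search snippets into a single prompt.

    Hypothetical helper: a real system would fetch the snippets from a
    search engine and pass the resulting prompt to the local model.
    """
    numbered = [f"[{i}] {text}"
                for i, text in enumerate(snippets[:max_snippets], start=1)]
    context = "\n".join(numbered)
    return (
        "Answer the question using only the search results below.\n\n"
        f"Search results:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Stand-in for search results; no search engine is called in this sketch.
results = ["Microsoft Build begins on May 21."]
print(build_augmented_prompt("When does Microsoft Build start?", results))
```

The design trade-off is simple: the model stays small because the facts live in the search index, at the cost of needing a connection for questions that depend on them.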

4.) It’s a month before Microsoft Build

Microsoft’s developer conference, Build, kicks off on May 21, about a month from now. That should be plenty of time for Microsoft to get a prototype of Copilot running on top of Phi-3-mini, test out a few queries, and have something to demonstrate to developers and users.

Copilot, of course, will likely be the star of the show. Will we get one that runs natively on your PC? I think it’s much more likely than it once was.